How Can We Recognise Emotions Responsibly with AI?

Automatic feedback on successful customer service, timely support for employees, and detecting the threat of violence in healthcare work are some examples of potential applications for speech emotion recognition. Loihde's TurvAIsa research project studies both verbal and non-verbal cues by combining text-based emotion analysis with speech emotion recognition based on the acoustic signal. TurvAIsa is funded by Business Finland.

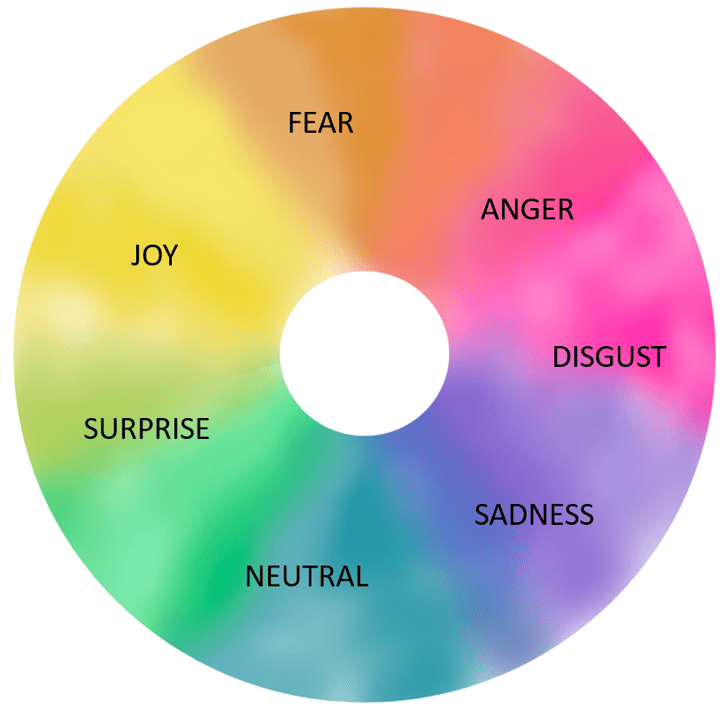

Emotion recognition in a communication situation may at first seem like a fairly straightforward task, where we try to determine whether people are, for example, happy, surprised, or angry. On closer consideration, however, various questions arise:

- Is the goal to capture the emotion or emotional state over a shorter or longer time frame?

- Emotions do not have precise boundaries, so which emotions are we trying to recognise, and are we also interested in their intensity?

- Is the aim to identify the person's internal emotional state or simply the emotions conveyed in the communication situation?

- How should the context of the situation be taken into account, and what about cultural and linguistic differences?

- Will the recognition rely on facial expressions, gestures, words, speech, or some other cues, and how do we capture and record these cues?

Human communication is much more than the sum of words. It consists of both verbal and non-verbal cues that complement each other. We humans are often good at interpreting these cues, but our ability can diminish when we get tired. Artificial intelligence, on the other hand, doesn't get tired, so could we use AI to recognise emotions responsibly and use it to build more intelligent customer service solutions? There are potential applications in various industries. In healthcare, for example, AI could identify aggression, report it, and even call for assistance. Phone and chat services could also benefit from emotion recognition and provide better and safer experiences for both customers and employees.

Reliability brings responsibility

In Loihde's TurvAIsa project, we approach emotion recognition from two perspectives. Speech emotion recognition aims to identify emotions from the acoustic features of the speech signal, while text-based emotion analysis examines the emotional tone of what is said, using the transcribed speech. Initial results from TurvAIsa show that combining audio and text analysis significantly improves the accuracy of emotion recognition. For text analysis, we can adapt models to specific contexts and languages using tools such as large language models, for example OpenAI's ChatGPT. We can also recognise key terms relevant to the application and use them to target the analysis more precisely in time. Combining speech and text emotion recognition increases the reliability, and consequently the responsibility, of emotion recognition.
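The post does not describe how TurvAIsa combines the two modalities. One common approach is late fusion: each model outputs a probability for each emotion, and the two distributions are averaged with a weight. The sketch below assumes both models already produce per-emotion scores; the label set, the `late_fusion` and `predict` helpers, and the default weight of 0.5 are hypothetical illustrations, not the project's implementation.

```python
from typing import Dict

# Hypothetical label set; the emotions recognised in TurvAIsa are not stated in the post.
EMOTIONS = ("neutral", "happy", "sad", "angry")


def normalise(scores: Dict[str, float]) -> Dict[str, float]:
    """Rescale non-negative scores so they sum to 1 over EMOTIONS."""
    total = sum(scores.get(e, 0.0) for e in EMOTIONS)
    if total <= 0:
        # No evidence at all: fall back to a uniform distribution.
        return {e: 1.0 / len(EMOTIONS) for e in EMOTIONS}
    return {e: scores.get(e, 0.0) / total for e in EMOTIONS}


def late_fusion(audio: Dict[str, float], text: Dict[str, float],
                audio_weight: float = 0.5) -> Dict[str, float]:
    """Weighted average of the per-emotion probabilities of an audio and a text model."""
    if not 0.0 <= audio_weight <= 1.0:
        raise ValueError("audio_weight must be in [0, 1]")
    a, t = normalise(audio), normalise(text)
    return {e: audio_weight * a[e] + (1.0 - audio_weight) * t[e] for e in EMOTIONS}


def predict(fused: Dict[str, float]) -> str:
    """Return the emotion with the highest fused probability."""
    return max(fused, key=fused.get)


# Usage with made-up scores: the acoustic model is unsure between "angry" and
# "neutral", while the text model leans towards "angry".
audio_scores = {"angry": 0.5, "neutral": 0.4, "sad": 0.1}
text_scores = {"angry": 0.6, "happy": 0.3, "neutral": 0.1}
fused = late_fusion(audio_scores, text_scores)
print(predict(fused))  # angry
```

In practice the weight would be tuned on validation data, for instance giving the text model less weight when transcripts are unreliable. Alternatives that fuse intermediate features instead of final probabilities also exist.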

Know your data

Over the past months, AI, with ChatGPT at the forefront, has become an increasingly visible part of our everyday lives and businesses. ChatGPT is a large language model, but not all AI solutions require such large models. Many of today's AI solutions are based on the principles of data-driven AI, where algorithms are developed with smaller, comprehensive, and high-quality datasets. Training and testing datasets can also be extended with data augmentation and synthetic data, which is a growing trend in AI development.
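As an illustration of data augmentation for speech, one widely used technique is to mix noise into clean recordings at a controlled signal-to-noise ratio (SNR), so the model sees the same utterance under different acoustic conditions. The sketch assumes audio is a list of float samples and uses Gaussian white noise; the function name and parameters are hypothetical, and the post does not say which augmentations TurvAIsa uses.

```python
import math
import random
from typing import List, Sequence


def add_noise_at_snr(signal: Sequence[float], snr_db: float, seed: int = 0) -> List[float]:
    """Return a noisy copy of `signal` at approximately `snr_db` decibels SNR."""
    if not signal:
        raise ValueError("signal must not be empty")
    rng = random.Random(seed)  # seeded so augmented copies are reproducible
    signal_power = sum(x * x for x in signal) / len(signal)
    # SNR_dB = 10 * log10(P_signal / P_noise), solved for the noise power.
    noise_power = signal_power / (10 ** (snr_db / 10))
    noise_std = math.sqrt(noise_power)
    return [x + rng.gauss(0.0, noise_std) for x in signal]


# Usage: one second of a 200 Hz tone sampled at 16 kHz stands in for a recording.
SAMPLE_RATE = 16_000
clean = [0.5 * math.sin(2 * math.pi * 200 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE)]
# Three augmented copies, from mildly to heavily degraded.
augmented = {snr: add_noise_at_snr(clean, snr, seed=snr) for snr in (20, 10, 5)}
```

Real pipelines often mix in recorded background noise (for example office or ward sounds) rather than white noise, but the SNR calculation stays the same.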

Since AI solutions rely heavily on data, the choice of training data has a significant impact on the responsibility and ethics of the target applications. In speech emotion recognition, training on human-validated data guides the algorithm to recognise emotions that are audible and perceivable in communication situations, which helps us better understand what we are actually detecting. For transparency, it is also important to be able to tell what kind of data is behind the model and to analyse possible biases that may arise from it. "Know your data" is a good guideline for responsible AI.
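One simple way to start analysing biases is to audit the labels before training: compare how often each emotion label occurs within each group of speakers and flag large deviations from the overall distribution. The sketch below assumes each training record is a dictionary with an `emotion` label and a group attribute such as a hypothetical `language` field; the function names and the 0.15 threshold are illustrative choices, and a skewed label distribution is only one of many possible sources of bias.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Mapping, Tuple


def label_distribution_by_group(records: Iterable[Mapping[str, str]], group_key: str,
                                label_key: str = "emotion") -> Dict[str, Dict[str, float]]:
    """Share of each label within each group, e.g. per language or per speaker group."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for r in records:
        counts[r[group_key]][r[label_key]] += 1
    return {g: {label: n / sum(c.values()) for label, n in c.items()}
            for g, c in counts.items()}


def flag_imbalances(records: Iterable[Mapping[str, str]], group_key: str,
                    label_key: str = "emotion",
                    threshold: float = 0.15) -> List[Tuple[str, str, float]]:
    """List (group, label, gap) where a group's label share differs from the overall share."""
    records = list(records)
    overall = Counter(r[label_key] for r in records)
    total = len(records)
    flags = []
    for group, dist in label_distribution_by_group(records, group_key, label_key).items():
        for label, n in overall.items():
            gap = dist.get(label, 0.0) - n / total
            if abs(gap) > threshold:
                flags.append((group, label, round(gap, 3)))
    return flags


# Usage with a tiny made-up dataset: "angry" is over-represented in Finnish samples.
data = (
    [{"language": "fi", "emotion": "angry"}] * 3
    + [{"language": "fi", "emotion": "neutral"}]
    + [{"language": "en", "emotion": "angry"}]
    + [{"language": "en", "emotion": "neutral"}] * 3
)
for flag in flag_imbalances(data, group_key="language"):
    print(flag)
```

A flag like this does not prove the model will be biased, but it tells us where to look more closely before trusting the model's output for a particular group.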

This blog post was written by Laura Laaksonen, one of our professionals.